DISQOVER DATA

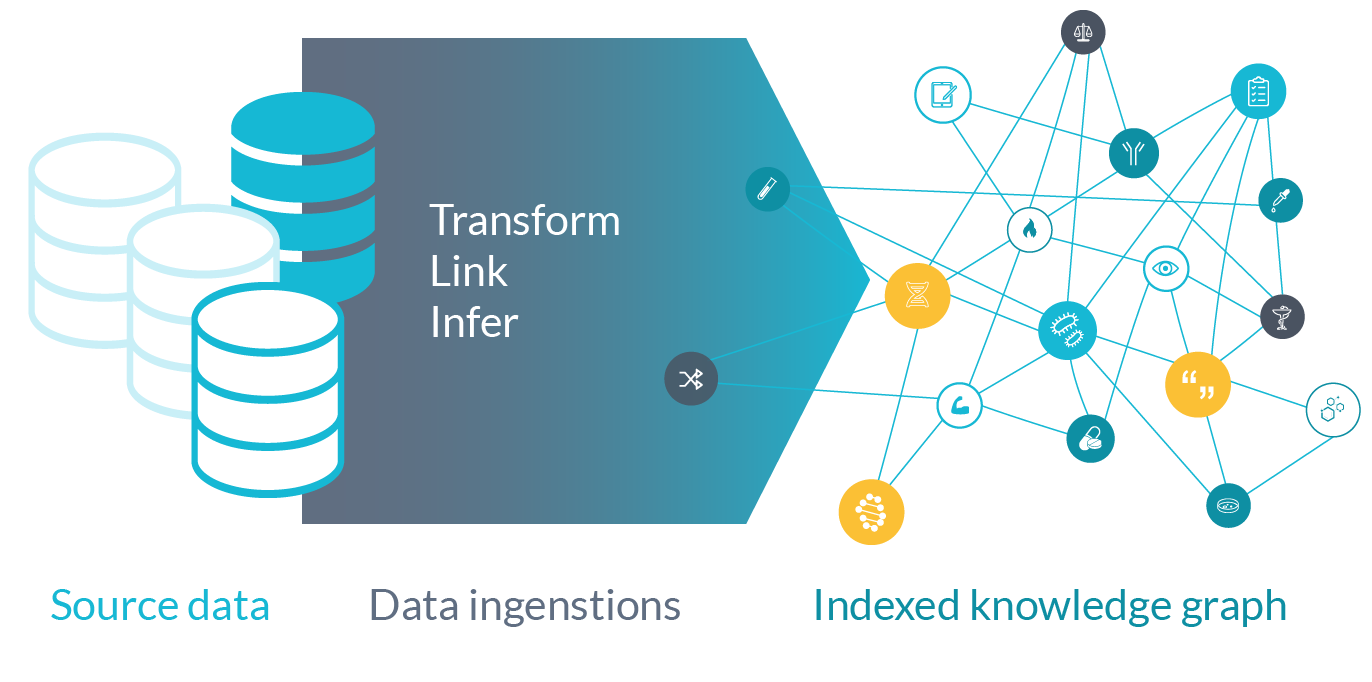

DISQOVER is equipped with a powerful, efficient, and intuitive Data Ingestion Engine, designed to empower domain experts. As a data scientist, you can import, manipulate, link, and integrate your own data into DISQOVER using an innovative visual pipeline environment.

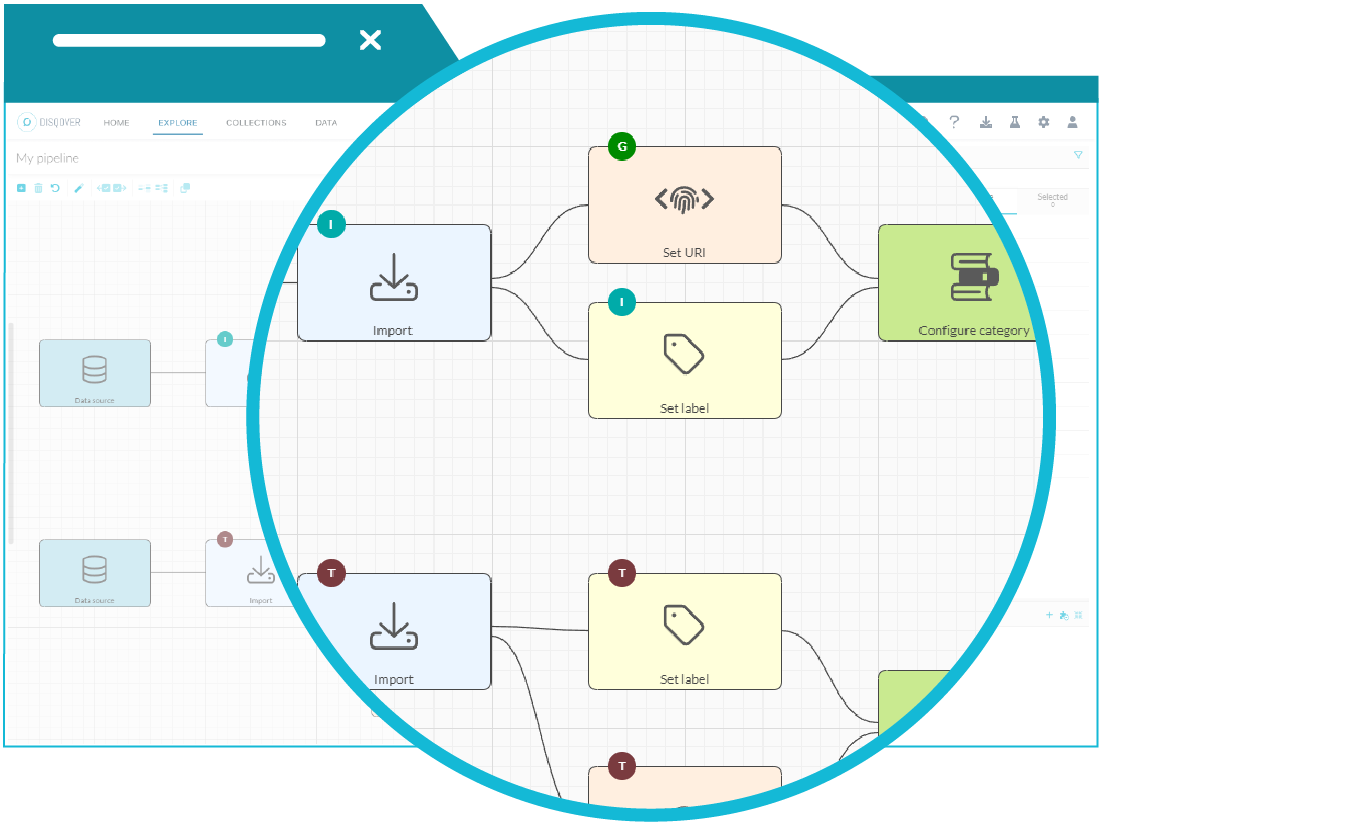

DISQOVER’s Data Ingestion Engine gives domain experts and data wranglers an intuitive visual interface that lets them deliver value quickly. When you configure the integration of your source data in DISQOVER, you manage the data ingestion process by building a visual pipeline from a wide range of powerful, reusable components. There is no need to write extensive code. Compared to a conventional approach relying on, e.g., RDF and SPARQL, fewer specialized skills are needed and development time is reduced, while the same level of power and flexibility is retained.

A visual pipeline makes it easier to communicate the choices made during data integration. This increases transparency and auditability and reduces the risk of errors. Stakeholders with only basic IT knowledge can understand, review, challenge, and contribute to the data integration process.

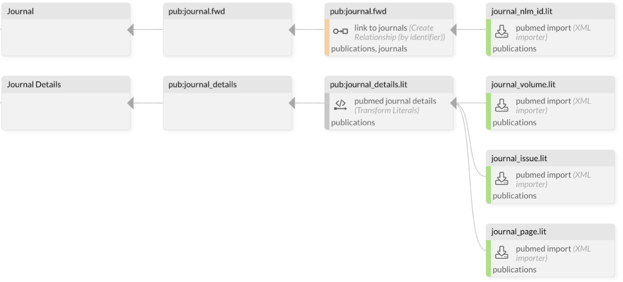

The Data Ingestion Engine tracks data dependencies throughout the entire pipeline. As a result, you can see which source data fields contributed to each information field in the DISQOVER database. Conversely, for any source data field, you can see every DISQOVER field it contributes to.

© 2025 ONTOFORCE. All rights reserved.