Knowledge graph

In the ever-evolving landscape of pharmaceutical research and development, the quest for innovative therapies and drugs has become paramount. Biopharma firms need to speed up drug discovery by using lots of data from research, manufacturing, experiments, trials, and more. To address this challenge and revolutionize drug discovery, the adoption of a knowledge graph offers a transformative solution.

According to Deloitte, the leading 20 pharmaceutical firms expended a massive $139 billion on research and development in 2022. Despite this substantial investment, the rate of new drug approvals has slowed considerably in recent years. This disparity underscores the need for a paradigm shift in drug discovery methodologies, especially in underserved therapeutic areas.

Automating drug discovery workflows at research sites brings hope and aligns with the Pharma 4.0 vision, which emphasizes outcomes tailored to clinical, process development, and manufacturing data aggregation.

Biotech and pharma companies collect a lot of data over time. This data includes information about molecules, lab experiments, and clinical trials.

However, researchers often find it difficult to access and use this data effectively because it is stored in different places. The painstaking process of connecting and consolidating this data can take weeks or even months, impeding the drug discovery journey.

The solution to fragmented and disconnected data sources lies in making use of a knowledge graph.

Creating a knowledge graph involves two important parts: the user interface and the data storage system.

ETL processes are used to convert and combine data efficiently. The data is then prepared for easy access. Monitoring and notifications are carefully managed to make sure the data flows smoothly.

Predictive models extend what the knowledge graph can do. They handle tasks such as checking toxicity or recommending similar drugs, which makes the knowledge graph more impactful and gives researchers more useful information.

The knowledge graph is also useful for searching. It helps users find specific information and important details. This information can be used to make smart choices, like adjusting experiments or improving drug production using past data.

One critical aspect of building and maintaining a knowledge graph is data licensing.

Many sources provide data under various licenses, and it's crucial to understand the licensing terms and ensure that the data is available for use.

Moreover, maintaining data licenses is not a one-time task. It requires regular reviews because sources and their licenses can change over time. This ongoing process ensures that the knowledge graph remains compliant with the latest licensing terms.
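As a rough illustration of such a recurring review, the sketch below lists the sources whose license was last checked longer ago than a chosen interval. The record fields (`source`, `last_reviewed`), the source names, and the 180-day interval are assumptions made for this example, not part of any described system.

```python
from datetime import date, timedelta

def licenses_due_for_review(records, today, max_age_days=180):
    """Return the names of sources whose license review is older than max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [r["source"] for r in records if r["last_reviewed"] < cutoff]

# Hypothetical review log; names and dates are illustrative only.
records = [
    {"source": "source_a", "license": "CC BY 4.0", "last_reviewed": date(2024, 1, 15)},
    {"source": "source_b", "license": "CC0", "last_reviewed": date(2024, 12, 1)},
]
due = licenses_due_for_review(records, today=date(2025, 1, 1))
```

Running a check like this on a schedule turns the license review into a routine task rather than something that depends on someone remembering it.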

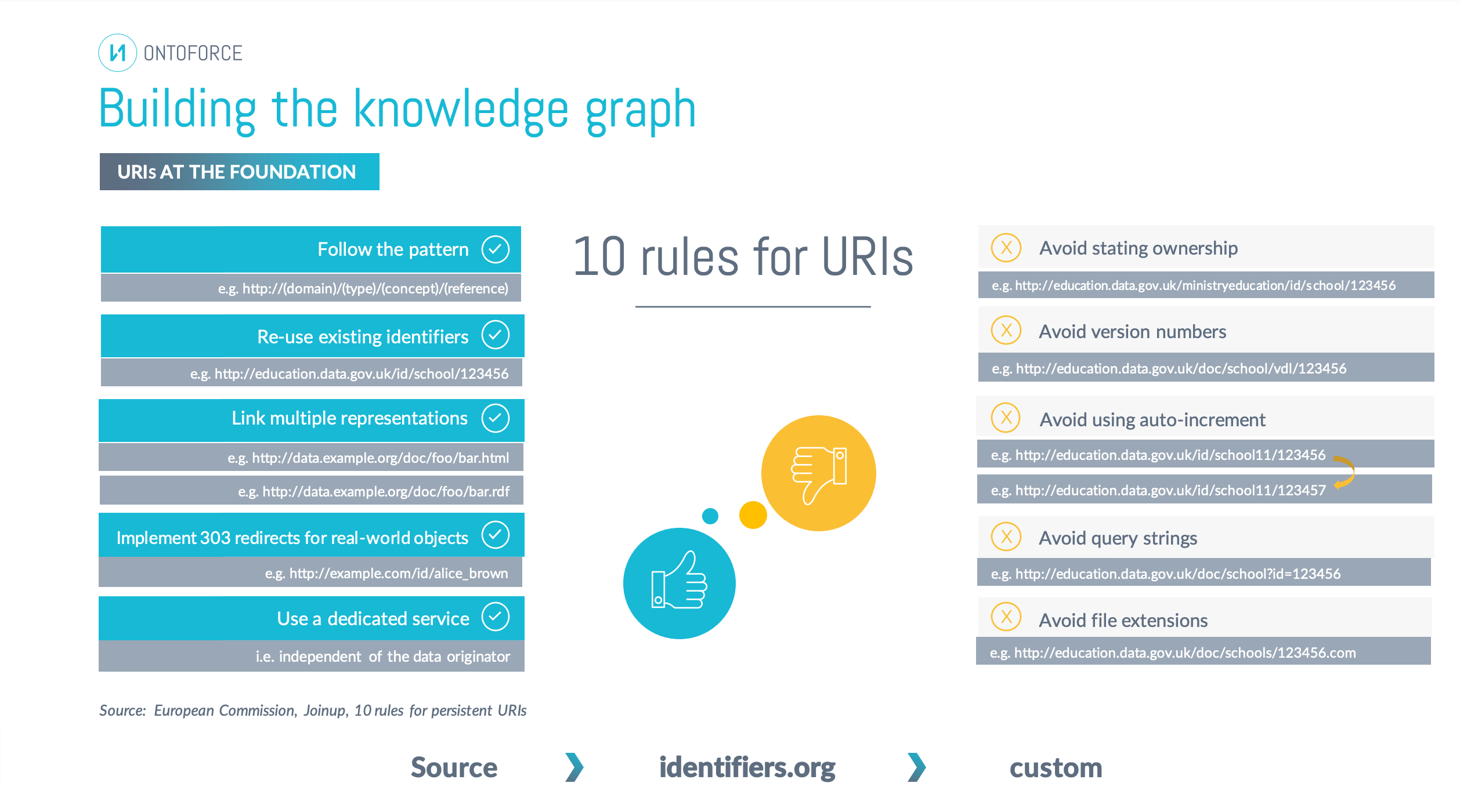

After integrating data sources into the knowledge graph, the first step is to create unique URIs for entities.

The choice of URI structure depends on various factors, including the presence of source-specific URI schemes.
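One possible way to mint such URIs is to combine an entity type, the source name, and the source-local identifier under a single namespace. The sketch below assumes this scheme; the `https://example.org/kg` namespace and the `mint_uri` helper are hypothetical and only illustrate the idea.

```python
from urllib.parse import quote

BASE = "https://example.org/kg"  # hypothetical namespace, not a real endpoint

def mint_uri(entity_type: str, source: str, local_id: str) -> str:
    """Build a URI from entity type, source name, and source-local identifier."""
    parts = [entity_type.strip().lower(), source.strip().lower(), local_id.strip()]
    if not all(parts):
        raise ValueError("entity_type, source, and local_id must be non-empty")
    # Percent-encode each segment so characters like ':' or '/' cannot break the path.
    return BASE + "/" + "/".join(quote(p, safe="") for p in parts)

uri = mint_uri("Compound", "SourceA", "CHEM:123")
```

Including the source name in the path keeps identifiers from different sources apart even when their local IDs collide.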

As the knowledge graph grows with data from multiple sources, the challenge of equivalency matching arises.

Equivalency matching means finding entities in the graph that represent the same concept, even if they're from different sources.
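A minimal sketch of one way to do this, assuming the sources already supply pairwise cross-references ("entity A is the same as entity B"): a union-find structure merges those pairs into groups of equivalent entities. The identifiers are made up, and real matching usually also needs fuzzier signals such as names or chemical structures.

```python
from collections import defaultdict

def find(parent, x):
    """Return the representative of x's group, shortening the path as we go."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x

def equivalence_classes(entities, same_as_pairs):
    """Group entities that are connected through pairwise cross-references."""
    parent = {e: e for e in entities}
    for a, b in same_as_pairs:
        parent.setdefault(a, a)
        parent.setdefault(b, b)
        root_a, root_b = find(parent, a), find(parent, b)
        if root_a != root_b:
            parent[root_b] = root_a  # merge the two groups
    groups = defaultdict(set)
    for e in parent:
        groups[find(parent, e)].add(e)
    return sorted(sorted(g) for g in groups.values())

# Hypothetical identifiers from three sources.
classes = equivalence_classes(
    ["a:1", "a:2", "b:7", "c:9"],
    [("a:1", "b:7"), ("b:7", "c:9")],
)
```

Because the cross-references are treated as transitive, `a:1` and `c:9` end up in the same group even though no source links them directly.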

However, modularization also introduces the challenge of reintegrating data modules to ensure that all links within the global knowledge graph are established correctly. This step involves stitching together the modularized data.

Building and maintaining a knowledge graph with data from numerous sources requires a scalable approach.

Modularization provides several advantages, including:

The downloading stage checks data sources for changes in structure or format. Validation ensures that the downloaded data matches expectations.
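For a tabular source, a structural check of this kind could be as simple as comparing the header of the downloaded file with the columns the pipeline expects. The sketch below assumes CSV input; the column names are invented for illustration.

```python
import csv
import io

def validate_header(csv_text, expected_columns):
    """Compare the header of a downloaded CSV with the expected columns.

    Returns (missing, unexpected) as two lists of column names.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, [])
    missing = [c for c in expected_columns if c not in header]
    unexpected = [c for c in header if c not in expected_columns]
    return missing, unexpected

# Hypothetical download in which a column was renamed upstream.
downloaded = "id,name,smiles\n1,aspirin,CC(=O)OC1=CC=CC=C1C(=O)O\n"
missing, unexpected = validate_header(downloaded, ["id", "name", "inchi"])
```

A non-empty `missing` or `unexpected` list signals that the source changed its format and the download should not proceed to the next stage unreviewed.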

During the modularization stage, we perform quality control checks on each module, which include link verification and data normalization.
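The two checks named here can be sketched as follows, assuming a module is represented as a set of node identifiers plus a list of (source, target) links. Both helpers and the sample data are hypothetical.

```python
def normalize_label(label: str) -> str:
    """Collapse repeated whitespace and ignore case differences."""
    return " ".join(label.split()).casefold()

def dangling_links(nodes, edges):
    """Return the links whose source or target is not a known node in the module."""
    known = set(nodes)
    return [(s, t) for s, t in edges if s not in known or t not in known]

# Hypothetical module with one link pointing at a node that does not exist.
nodes = ["compound/1", "target/9"]
edges = [("compound/1", "target/9"), ("compound/1", "target/404")]
broken = dangling_links(nodes, edges)
```

Links that legitimately point into another module would need an allow-list or a later check at integration time, which this sketch does not cover.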

During the integration stage, we combine data domains into the global knowledge graph and conduct quality control checks. A regression test compares the current data with previous versions to identify any significant changes.
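One simple form such a regression test could take is a comparison of entity counts per type between two versions, flagging anything that moved by more than a tolerance. The 10% threshold and the counts below are assumptions for illustration.

```python
def regression_report(previous, current, max_change=0.10):
    """Flag entity types whose count changed by more than max_change (relative)."""
    flagged = {}
    for key in sorted(set(previous) | set(current)):
        old, new = previous.get(key, 0), current.get(key, 0)
        if old == new:
            continue
        # A type that appears from nothing counts as an unbounded change.
        change = abs(new - old) / old if old else float("inf")
        if change > max_change:
            flagged[key] = (old, new)
    return flagged

# Hypothetical counts for two consecutive builds.
previous = {"Compound": 1000, "Trial": 200}
current = {"Compound": 1020, "Trial": 120, "Gene": 50}
report = regression_report(previous, current)
```

Here the 2% growth in compounds passes, while the 40% drop in trials and the newly appearing gene type are both flagged for review.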

Collaboration plays a vital role in maintaining data quality and resolving issues.

When issues arise due to source-specific changes, there are two primary approaches for resolution:

Building and maintaining a knowledge graph for drug discovery is a complex but highly rewarding endeavor.

Achieving success in knowledge graph construction and maintenance hinges on elements such as data licensing compliance, the creation of globally unique URIs, equivalency matching, modularization for scalability, data quality control, and collaborative issue resolution.

The pharmaceutical industry can speed up finding new drugs and promote innovation in the search for new treatments using knowledge graphs.

© 2025 ONTOFORCE. All rights reserved.